Quantum Computers Bring Uncertainty Knocking at Our Doors

06/10/2019

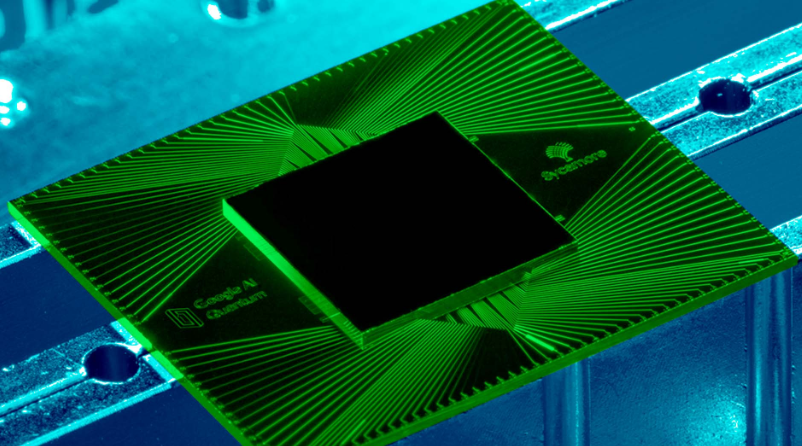

No, quantum computing did not come of age with Google’s Sycamore, a 54-qubit processor that solved in 200 seconds a problem which, by Google’s estimate, would take even a supercomputer 10,000 years. Rather, Sycamore is the first step in showing that a quantum computer can carry out a functional computation, and that it does indeed solve a special class of problems much faster than a conventional computer. This is quantum supremacy: the day a quantum computer beats all classical computers on one specially constructed task.

The bad news, for science fiction enthusiasts, is that it is not going to replace our computers; it will be useful only for a special class of problems. Building one requires conditions such as extremely low temperatures, which can be created only in a special environment. We are not going to wear one on our sleeves or use one in our cell phones. At least not yet, and not with today’s physics! And the encryption algorithms on which our internet protocols and the world’s financial transactions are based are safe, at least for now.

Nevertheless, this is a huge step forward in a field where nations and companies are spending billions in the belief that it will open up areas of computation that have so far been closed. This is the first example of a specific problem being solved this way, even if it is a very particular one, specially created for quantum computers. Such problems could be extended to the real world: the properties of new materials, artificial intelligence, protein chemistry. In these areas, either the systems themselves exhibit quantum effects, or they deal with probabilities and are therefore better simulated by quantum computers.

Of course, cryptographers have watched the race to quantum supremacy with dread. Internet protocols, the world’s financial transactions and blockchain-based systems (for example, Bitcoin) all rely on cryptography. It is known that a sufficiently large quantum computer running Shor’s algorithm could easily break the public-key cryptography in wide use today, including the popular RSA public-private key systems. That is a real nightmare for nation states and financial players who rely on such encryption. New systems can be created that would withstand such quantum tools, but at a huge cost. And information encrypted today with the current systems could still be decrypted later, and would therefore become readable.
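Why does factoring matter so much? The toy Python sketch below helps show it. It uses textbook-sized numbers: the primes 61 and 53 and the exponent 17 are illustrative assumptions, while real RSA keys use primes hundreds of digits long. The key pair is built from two secret primes, and anyone who can factor the public modulus can rebuild the private key. Here, trial division stands in for the attacker. It is hopeless against real key sizes, and that is exactly the step a large quantum computer would make feasible.

```python
from math import gcd

def make_keys(p: int, q: int, e: int = 17):
    """Build a toy RSA key pair from two secret primes p and q."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be coprime with phi"
    d = pow(e, -1, phi)  # private exponent: modular inverse of e (Python 3.8+)
    return (n, e), d

def encrypt(m: int, public_key) -> int:
    n, e = public_key
    return pow(m, e, n)

def decrypt(c: int, d: int, n: int) -> int:
    return pow(c, d, n)

def factor_by_trial_division(n: int):
    """The attacker's step: recover p and q from the public modulus n.
    Feasible here only because n is tiny."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")

public_key, private_d = make_keys(61, 53)
n, e = public_key
ciphertext = encrypt(65, public_key)

# The attacker sees only the public key (n, e) and the ciphertext.
p, q = factor_by_trial_division(n)
recovered_d = pow(e, -1, (p - 1) * (q - 1))
print(recovered_d == private_d, decrypt(ciphertext, recovered_d, n))  # True 65
```

The only secret is the factorisation of `n`. Once `p` and `q` are known, the private exponent follows from one modular inverse.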

The paper that created the worldwide sensation about achieving quantum supremacy, the holy grail of quantum computing, is only a draft. A NASA researcher associated with Google’s work inadvertently uploaded it to NASA’s server for technical papers. As it happened, Google Scholar routinely scours such servers, and it alerted quantum researchers worldwide to the paper’s existence. It was Google that outed Google’s unpublished paper!

The paper, “Quantum supremacy using a programmable superconducting processor”, by Google AI Quantum and collaborators, has now been removed from the NASA server, but is of course widely available.

Let us get back to some physics. What is a quantum computer? The idea was famously proposed by Professor Richard Feynman, who argued that a computer running on quantum principles could solve quantum-world problems in physics and chemistry. That is, if we are trying to simulate quantum phenomena on machines, the only way to compute the quantum world in reasonable time would be to build the machines themselves on quantum phenomena: quantum computers. At the time, it was essentially a thought experiment. It showed why computers based on classical physics could not simulate quantum phenomena efficiently: the simulations would take far too long.

So why do machines based on classical physics not work for quantum phenomena? Simply put, the resources needed to simulate such systems grow exponentially with the size of the system, and the cost climbs further as the time horizon for which the end state is computed grows. In any case, the future states of the quantum world are probability distributions. They are better captured by quantum computers, which also give their results as probability distributions.
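The exponential growth can be made concrete with a little arithmetic. A brute-force classical simulation of n qubits keeps one complex amplitude for each of the 2ⁿ possible bit patterns. The sketch below assumes 16 bytes per amplitude (two 64-bit floats). Other simulation methods trade this memory for extra time, but the exponential wall remains.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats

def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to store every amplitude of an n-qubit state: 2**n complex numbers."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 20, 30, 40, 53):
    print(f"{n:2d} qubits: {state_vector_bytes(n):.3e} bytes")
```

Each extra qubit doubles the memory. Ten qubits fit in 16 kilobytes, but 53 qubits need 2⁵⁷ bytes, roughly 144 petabytes.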

So what is the difference between computers built on quantum principles and those built on classical physics? All our computers in everyday use are classical computers, in this language. In them, information exists only in binary form: the smallest unit of information, a bit, is either a 0 or a 1 (False = 0, True = 1). A quantum computer has quantum bits, or qubits, which can exist in a superposition of 0 and 1 at the same time. The final value of this superposition can be found only when it is measured, when it “collapses” to either a 0 or a 1. When that happens, the superposition is destroyed, and the qubit can no longer carry the earlier calculation forward.
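A qubit can be modelled on paper as two amplitudes, α for 0 and β for 1, with |α|² + |β|² = 1. This minimal classical simulation shows superposition and collapse. It is not how quantum hardware works, and the function name `measure` is hypothetical. A measurement returns 0 with probability |α|² and 1 with probability |β|², and it leaves the qubit in the state it reported.

```python
import math
import random

def measure(alpha: complex, beta: complex, rng: random.Random):
    """Measure a qubit alpha|0> + beta|1>.

    Returns the classical outcome and the collapsed state: outcome 0 occurs
    with probability |alpha|^2, and afterwards the superposition is gone.
    """
    p0 = abs(alpha) ** 2
    assert math.isclose(p0 + abs(beta) ** 2, 1.0), "state must be normalized"
    if rng.random() < p0:
        return 0, (1 + 0j, 0j)
    return 1, (0j, 1 + 0j)

# An equal superposition: about half the measurements give 0, half give 1.
alpha = beta = 1 / math.sqrt(2)
rng = random.Random(2019)  # seeded so runs are repeatable
outcomes = [measure(alpha, beta, rng)[0] for _ in range(10_000)]
print(sum(outcomes) / len(outcomes))  # close to 0.5

# Measuring the collapsed state again always repeats the first result.
first, (a, b) = measure(alpha, beta, rng)
assert all(measure(a, b, rng)[0] == first for _ in range(100))
```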

The second difference between classical bits and qubits is that quantum phenomena have another property: entanglement. Qubits can be entangled with one another, so that their joint state can no longer be described by listing the state of each qubit individually. This gives rise to many more possible states that they can be in together than each of them has alone. In other words, qubits can handle a much larger number of possibilities, and can therefore compute a certain class of problems much faster. And the beauty of such computations is that, for the right class of problems, the size of the problem matters far less than on a classical machine, as the qubits working together crunch bigger problems almost as fast as smaller ones.
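Entanglement can be seen in a short state-vector calculation. This is a pure-Python sketch, and the helper names are hypothetical. Two qubits need four amplitudes, for |00⟩, |01⟩, |10⟩ and |11⟩. The sketch puts the first qubit into superposition and then applies a controlled-NOT, which yields the entangled “Bell state” (|00⟩ + |11⟩)/√2. A two-qubit state splits into independent single-qubit states exactly when a₀₀·a₁₁ = a₀₁·a₁₀. The Bell state fails that test.

```python
import math

# Amplitudes of a two-qubit state, ordered |00>, |01>, |10>, |11>
# (the left digit is the first qubit).

def hadamard_first(state):
    """Superpose the first qubit: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """Flip the second qubit when the first qubit is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def is_product_state(state, tol=1e-12):
    """True if the state factors into (first-qubit state) x (second-qubit state)."""
    a00, a01, a10, a11 = state
    return abs(a00 * a11 - a01 * a10) < tol

start = [1, 0, 0, 0]                # both qubits are 0
superposed = hadamard_first(start)  # (|00> + |10>)/sqrt2: still separable
bell = cnot(superposed)             # (|00> + |11>)/sqrt2: entangled

print([round(abs(a) ** 2, 3) for a in bell])                 # [0.5, 0.0, 0.0, 0.5]
print(is_product_state(superposed), is_product_state(bell))  # True False
```

Measuring the Bell state gives 00 or 11 with equal odds, never 01 or 10. Neither qubit has a value of its own until the pair is measured. With n qubits the list grows to 2ⁿ amplitudes.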

What, then, is the catch, and why will classical computers not all be replaced by quantum computers? Let us set aside the technology and cost issues: the supercooled environment required, the difficulty of harnessing the technology, and the cost of all of this. Instead, let us look at the fundamental issues. First, a quantum computer deals with quantum quantities, and therefore with probabilities. By itself, it will not solve a given problem; it will provide only a probability distribution of possible results. Second, and even more difficult, the problem itself has to be formulated in a way that can be modelled in this quantum world, and only a certain class of problems can be modelled. Third, we will need additional qubits to provide error correction; otherwise errors in the system will propagate and destroy any meaningful computation.
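The idea behind spending extra qubits on error correction can be shown with its simplest classical analogue, the three-bit repetition code. Each logical bit is stored as three copies and read back by majority vote. The sketch assumes independent bit-flip errors with probability p per copy. Real quantum codes, such as the surface code, must also handle phase errors and need many more physical qubits per logical qubit. The function names are illustrative.

```python
import random

def logical_error_rate(p: float) -> float:
    """Exact failure rate of a 3-copy repetition code with majority voting:
    it fails only if 2 or 3 of the copies flip."""
    return 3 * p**2 * (1 - p) + p**3

def simulate(p: float, trials: int, rng: random.Random) -> float:
    """Monte Carlo check: store 0 as 000, flip each copy with probability p, decode by majority."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # the majority now reads 1, so the logical bit is lost
            failures += 1
    return failures / trials

p = 0.01  # assumed per-copy error probability
print(logical_error_rate(p))  # about 0.000298, versus 0.01 with no protection
print(simulate(p, 200_000, random.Random(42)))
```

The redundancy pays off only while the physical error rate is low. At p = 0.5 the formula gives 0.5, no better than a coin toss. This is why error correction is demanding as well as expensive in qubits.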

Google’s exercise addressed all three. It chose a problem that could be formulated in terms of probability distributions. It kept its error rates low enough for the result to remain meaningful, without yet using full error-correcting codes. And it is now verifying that the “answer”, a probability distribution, is indeed the solution. This verification can be done using classical computers, in this case a supercomputer.
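Google’s paper reports this check as the linear cross-entropy benchmarking fidelity, F = 2ⁿ⟨P(xᵢ)⟩ − 1. Here P(xᵢ) is the ideal probability, computed classically, of each bitstring xᵢ that the processor actually produced. F is close to 1 when the samples follow the ideal distribution, and close to 0 for uniform noise. The sketch below is a toy. Its “ideal” distribution is built from exponentially distributed weights, an assumption standing in for the output statistics of random circuits. The two “devices” are plain samplers.

```python
import random

def linear_xeb(ideal_probs, samples, num_qubits):
    """Linear cross-entropy fidelity: 2**n * mean(ideal probability of each sample) - 1."""
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return (2 ** num_qubits) * mean_p - 1

n = 10
rng = random.Random(7)

# Hypothetical 'ideal' output distribution over all 2**n bitstrings.
weights = [rng.expovariate(1.0) for _ in range(2 ** n)]
total = sum(weights)
ideal = [w / total for w in weights]

outcomes = range(2 ** n)
good_device = rng.choices(outcomes, weights=ideal, k=20_000)   # samples the ideal distribution
noisy_device = [rng.randrange(2 ** n) for _ in range(20_000)]  # pure noise: uniform guesses

print(round(linear_xeb(ideal, good_device, n), 2))   # near 1
print(round(linear_xeb(ideal, noisy_device, n), 2))  # near 0
```

A real, noisy processor lands somewhere between the two values. The ideal probabilities are the part that needs a powerful classical computer.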

It had been proposed that, to be useful, a quantum computer would require at least 40-50 qubits, would need to run for a minimum period, and would require extensive error correction. Google’s Sycamore processor has 54 qubits (one was defective, so effectively 53 were used). It kept its error rates low without yet using full error correction, and it ran for 200 seconds. And it solved a problem that would take a classical computer more than 10,000 years. Of course, the comparison is unfair; it is like asking a bridge player to compete in a marathon against a long-distance runner. But it does show that a quantum computer has passed its first test: solving a problem that would take a supercomputer far longer.

So what is the use of such a machine? Let us look at the flip side. Babbage’s mechanical machines (the Difference and Analytical Engines) evolved into actual computers only after we mastered the technology of vacuum tubes, and later electronic circuits. It took about a hundred years. The current qubit technologies are analogous to Babbage’s machines. And these machines will not solve the same problems that we solve with our current classical computers. So the future will combine both: classical and quantum computer “chips” working together, so that a problem can be partitioned into its classical and quantum parts.
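Shor’s factoring algorithm, the one that threatens RSA, is itself a good example of this partitioning. Only its order-finding step needs a quantum computer; everything around it is ordinary classical arithmetic. In the Python sketch below, `find_order_classically` is a hypothetical stand-in for that quantum subroutine. It uses brute force, which is exactly what becomes infeasible for large numbers. The choices of `n` and `a` in the examples are illustrative.

```python
from math import gcd

def find_order_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (assumes gcd(a, n) == 1).

    This is the step a quantum computer would perform; brute force here
    takes time that grows exponentially with the number of digits of n.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_with_order(n: int, a: int):
    """Classical pre- and post-processing around the order-finding step."""
    g = gcd(a, n)
    if g > 1:  # lucky guess: a already shares a factor with n
        return g, n // g
    r = find_order_classically(a, n)  # the quantum part in Shor's algorithm
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: retry with a different a
    return gcd(half - 1, n), gcd(half + 1, n)

print(factor_with_order(15, 7))  # (3, 5)
print(factor_with_order(21, 2))  # (7, 3)
```

The `gcd` checks, the parity test and the final `gcd` calls stay on a classical chip. Only `find_order_classically` would be handed to the quantum one.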

Nation states today have a huge stake in making their digital infrastructure secure against quantum computers. They are also looking to the future, even if it is some time away: years rather than decades.

The US is currently ahead of the game, with Google and IBM building 50-qubit architectures, and others close behind. D-Wave has built a much larger machine, with 4,000 qubits, but it has yet to solve a problem of the kind that Google’s Sycamore has. The Chinese have a functional 24-qubit machine and are behind the US in this aspect of the race. But they seem to have many more researchers in this area, as well as more patents and more research papers in major conferences and publications. Compared to these, India is far behind, with a 3-qubit system at TIFR and spending that is not even 1 per cent of US and Chinese investments.

Google’s latest exercise has brought quantum computing, a technology that embeds uncertainty, knocking at our doors. When and how it will enter remains to be seen. But it is no longer a question of if, but when.